The latest generation of AI-based splat rendering plugins, such as World Labs’ Marble, is delivering remarkable visual quality. By bypassing the traditional “Convert to Polygons” step in the GLB workflow, these tools produce exceptionally clean renders with sharper detail and fewer artifacts. The overall aesthetic quality is impressive, with near-photorealistic results straight from the model output.

Still, there are notable limitations that affect both the production pipeline and scene interactivity. These constraints define where splat rendering technology currently stands in terms of production readiness.

1. Scene Is Not Editable

Once generated, the splat scene is static. While it is possible to layer new geometry or assets on top, the core content cannot be altered. This means elements cannot be deleted or repositioned, lighting cannot be modified, and materials and environment parameters remain fixed.

Essentially, what you generate is what you get. This restricts iterative workflows that depend on scene editing or real-time adjustment.

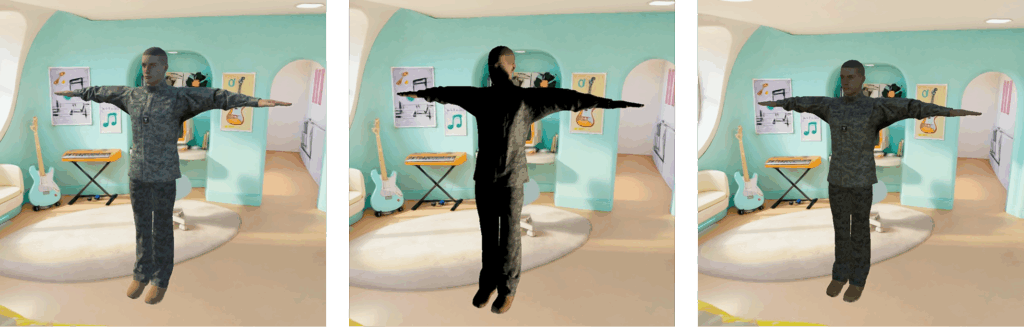

2. Hallucinations from Non-Optimal Viewpoints

From the original capture or generation angle, splat scenes appear highly realistic. However, when viewed from secondary positions, visual consistency rapidly deteriorates.

Objects can appear distorted or “melted,” and environmental elements such as corridors or furniture often blur or collapse when observed from alternative viewpoints.

This issue stems from AI hallucination. Where the source view provides incomplete spatial information, the model has to invent what it cannot see, and those invented regions produce unstable geometry beyond the primary view.
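Because quality holds up best near the original viewpoint, one practical mitigation is to keep the viewer close to it. The Python sketch below checks how far a camera has drifted from the generation viewpoint in both position and viewing direction, and pulls it back inside a small radius. The function names and the 0.5 m / 30° thresholds are hypothetical values chosen for illustration. They are not settings exposed by Marble or any splat renderer and would need tuning per scene.

```python
import math

# Hypothetical thresholds for illustration only; tune per scene.
MAX_OFFSET_M = 0.5    # max distance (meters) from the generation viewpoint
MAX_ANGLE_DEG = 30.0  # max rotation of the view direction away from it


def _normalize(v):
    """Scale a 3D vector to unit length."""
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)


def view_is_safe(origin, forward, cam_pos, cam_forward,
                 max_offset=MAX_OFFSET_M, max_angle_deg=MAX_ANGLE_DEG):
    """Return True if the camera stays near the original viewpoint
    in both position and viewing direction."""
    offset = math.dist(origin, cam_pos)
    f0, f1 = _normalize(forward), _normalize(cam_forward)
    # Clamp the dot product to avoid acos domain errors from rounding.
    cos_angle = max(-1.0, min(1.0, sum(a * b for a, b in zip(f0, f1))))
    angle = math.degrees(math.acos(cos_angle))
    return offset <= max_offset and angle <= max_angle_deg


def clamp_position(origin, cam_pos, max_offset=MAX_OFFSET_M):
    """Pull the camera back onto a sphere of radius max_offset around origin."""
    d = math.dist(origin, cam_pos)
    if d <= max_offset:
        return tuple(cam_pos)
    t = max_offset / d
    return tuple(o + (c - o) * t for o, c in zip(origin, cam_pos))
```

For example, a seated training scenario could call `clamp_position` every frame, allowing small head movements while blocking larger walks away from the capture point.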

3. Lighting Is Baked and Non-Interactive

Lighting within splat renders is fully baked, meaning it does not respond dynamically to new elements or scene changes. When placing Virtual Objects or Virtual Props, lighting must be manually recreated using an external rig to match the baked illumination.
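A rig that matches baked lighting usually starts from the scene’s overall color and brightness. The sketch below gives a rough starting point for the ambient term by averaging splat colors, weighted by opacity. It assumes the common 3D Gaussian Splatting layout, where each splat stores band-0 spherical-harmonic coefficients (`f_dc_0..2`) and base color is recovered as `0.5 + SH_C0 * f_dc`. Whether a given export exposes these fields is an assumption to check, and the helper names are hypothetical. The sketch recovers no light direction, so key lights still have to be placed by eye.

```python
# Band-0 spherical-harmonic constant, 1 / (2 * sqrt(pi)), as used by
# common 3D Gaussian Splatting tooling.
SH_C0 = 0.28209479177387814


def dc_to_rgb(f_dc):
    """Convert a splat's band-0 SH coefficients to an RGB triple clamped to [0, 1].

    Higher-order (view-dependent) SH bands are ignored here.
    """
    return tuple(min(1.0, max(0.0, 0.5 + SH_C0 * c)) for c in f_dc)


def estimate_ambient(splats):
    """Opacity-weighted mean RGB over all splats.

    `splats` is a list of (f_dc, opacity) pairs. Opacity is assumed to be
    already in [0, 1]; raw 3DGS files typically store it as a logit,
    which would need a sigmoid first.
    """
    total_w = 0.0
    acc = [0.0, 0.0, 0.0]
    for f_dc, opacity in splats:
        rgb = dc_to_rgb(f_dc)
        for i in range(3):
            acc[i] += rgb[i] * opacity
        total_w += opacity
    if total_w == 0.0:
        return (0.0, 0.0, 0.0)
    return tuple(a / total_w for a in acc)
```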

4. No Shadows or Emission

Dynamic lighting interactions are not currently supported. Virtual assets cannot cast shadows onto the splat environment, and emissive materials and light sources do not illuminate the splat scene.

This limitation prevents realistic integration of interactive elements, further isolating the splat as a static background rather than a fully integrated 3D environment.
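Because the splat cannot receive shadows, a common engine-side workaround (not a feature of the splat renderer itself) is a projected “fake” shadow. The virtual object’s vertices are projected along a single directional light onto an invisible ground plane, and the resulting footprint is drawn as a dark, semi-transparent decal over the splat floor. The sketch below assumes a flat floor at a known height and a light direction hand-matched to the baked lighting. The function names are hypothetical. The approach does nothing for walls, furniture, or emissive light, which is why the limitation above still stands.

```python
def project_to_ground(point, light_dir, ground_y=0.0):
    """Project a 3D point onto the plane y = ground_y along a directional light.

    light_dir points from the light toward the scene and must have a
    negative y component (light coming from above).
    """
    px, py, pz = point
    lx, ly, lz = light_dir
    if ly >= 0.0:
        raise ValueError("light must point downward (light_dir[1] < 0)")
    t = (ground_y - py) / ly  # how far to travel along light_dir to reach the plane
    return (px + lx * t, ground_y, pz + lz * t)


def shadow_footprint(vertices, light_dir, ground_y=0.0):
    """Project every vertex of a virtual object onto the ground plane."""
    return [project_to_ground(v, light_dir, ground_y) for v in vertices]
```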

5. Limited Collider Integration

In traditional geometry-based Unity scenes, collider tools enable robust physical interactions between users, objects, and the environment. With splats, no true geometry data exists, only point-based representations.

To approximate collisions, developers must manually assemble box colliders to fit the visible shapes in the space. This manual process is time-consuming, especially for complex environments, and offers limited precision.
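Part of that manual work can be roughed out from the splat data itself. The sketch below buckets splat center positions into a cubic grid, emits one axis-aligned box per well-populated cell, and adds a simple point-in-box test. It assumes splat centers are available as (x, y, z) tuples in meters. The function names, the 0.25 m cell size, and the `min_points` floater filter are hypothetical choices. Each (center, size) pair would map onto a Unity `BoxCollider`’s `center` and `size` properties.

```python
import math
from collections import defaultdict


def voxel_box_colliders(centers, cell=0.25, min_points=3):
    """Bucket splat centers into a cubic grid and return one axis-aligned
    box per sufficiently occupied cell, as (center, size) pairs."""
    counts = defaultdict(int)
    for x, y, z in centers:
        key = (math.floor(x / cell), math.floor(y / cell), math.floor(z / cell))
        counts[key] += 1
    boxes = []
    for (i, j, k), n in sorted(counts.items()):
        if n >= min_points:  # skip sparse cells, e.g. isolated floaters
            center = ((i + 0.5) * cell, (j + 0.5) * cell, (k + 0.5) * cell)
            boxes.append((center, (cell, cell, cell)))
    return boxes


def point_hits(point, boxes):
    """Return True if the point lies inside any box collider."""
    for (cx, cy, cz), (sx, sy, sz) in boxes:
        if (abs(point[0] - cx) <= sx / 2
                and abs(point[1] - cy) <= sy / 2
                and abs(point[2] - cz) <= sz / 2):
            return True
    return False
```

The cell size is the precision trade-off in miniature: smaller cells follow shapes more closely but produce many more boxes. Splat centers also sample only visible surfaces, so the output would still need manual cleanup, merging, and gap-filling, especially around hallucinated regions.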

So, Is AI Ready?

The AI Splat Renderer represents a major advancement in real-time visual fidelity. Its ability to deliver clean, high-quality renders without polygon conversion demonstrates clear potential for pre-visualization, simulation, and immersive fixed-view experiences.

However, the lack of scene editability, lighting interaction, and collider support presents significant challenges for interactive or production-level applications.

For now, splat rendering is best suited for fixed-camera simulations, immersive previews, and non-interactive visualizations where the viewer’s position remains static. Within these boundaries, the visual results can be outstanding and offer a strong indication of where real-time AI rendering may be heading next.

About HyperSkill

HyperSkill is a no-code 3D simulation platform for both Virtual and Augmented Reality. HyperSkill was created to enable instructional designers to create immersive training content without having to learn programming. HyperSkill includes thousands of 3D assets and simulations that users can access for free. HyperSkill enables non-programmers to author VR/AR content, publish it to various devices and audiences, and collect and visualize experience data. HyperSkill has been developed with R&D funding from the National Science Foundation. To learn more, please visit https://siminsights.com/hyperskill/

About SimInsights

SimInsights’ mission is to democratize the creation of 3D, interactive, immersive and intelligent (I³) simulations. We believe that simulations are the most impactful way to learn, yet few people can actually leverage them as a creative medium. SimInsights offers HyperSkill, a no-code tool to enable everyone to capture their knowledge in the form of 3D immersive, AI-powered simulations and share it with others. The SimInsights team used HyperSkill to create Skillful, a growing collection of simulations to help anyone master any skill.